Google Assistant will open up to developers in December with 'Actions on Google'

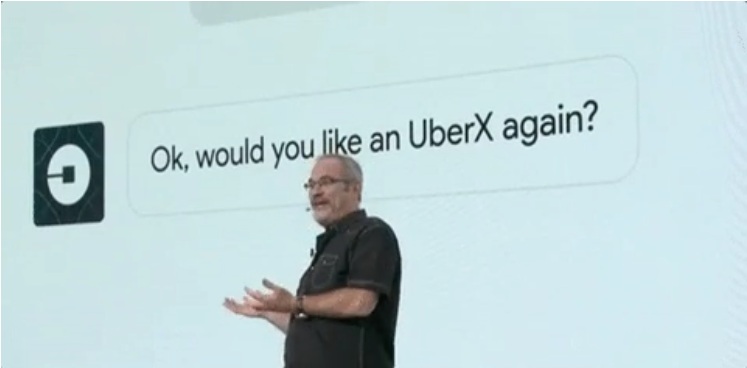

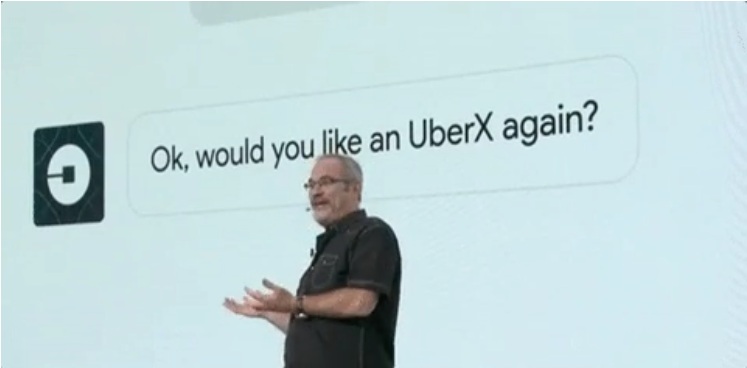

Google's not just taking hardware inspiration from Amazon with its new Google Home voice-controlled speaker, it's going to challenge Amazon on third-party software developer integration as well. That might not sound thrilling, but seeing how Amazon recently announced it has over 3,000 "skills" for Alexa, there's a lot of ground to make up for any potential challengers to Echo's throne. Google will need a lot of buy-in from software developers, service providers, and publishers to make Google Home a compelling alternative.

Today Google announced it will launch its Actions on Google program in December, which will allow developers to build "Actions" for Google Assistant. These will come in two flavors: Direct Actions and Conversation Actions.

Direct Actions are what most interactions with Siri and Alexa (and Google's existing Android Voice Actions) are like right now: ask for some information, get an answer. Ask to turn off the lights, the lights turn off. Ask to play [that song by that indie pop group you licensed for today's keynote] and it plays. Conversation Actions, in contrast, are more back and forth. On stage, Google's Scott Huffman demonstrated one such "Conversation":

The vague Uber request results in Google Assistant passing the conversation off to Uber to clarify and finalize. To me, this seems preferable to memorizing a complex "command" to input all at once. But to do this well, developers will have to build some intelligence into their own responses, something Google is happy to help them do through its own "conversational UX" services like API.AI.

What's most important to note is that these Actions have the potential to play in a lot more places than just Google Home. Google Assistant's presence in Allo (Google's new messaging app) and Pixel (Google's new phone) could give developers the "write once, run anywhere" reach they crave.

Google also promises to launch an Embedded Google Assistant SDK, which will allow tinkerers to load Google Assistant on a Raspberry Pi and hardware manufacturers to include Assistant in their products, something that's already happening in the Alexa ecosystem. The war for your casual vocal queries has really begun.

Source: http://www.theverge.com/2016/10/4/13164882/google-assistant-actions-on-google-developer-sdk

Pritesh Pethani